Transforming Data With Malli and Meander

Prologue

Malli is a high-performance, data-driven data specification library for Clojure. It supports both runtime data validation and static type linting via tools like clj-kondo and core.typed. This is the fourth post on Malli, focusing on data-driven value transformations with the help of Meander. Older posts include:

- Malli, Data-Driven Schemas for Clojure/Script

- Structure and Interpretation of Malli Regex Schemas

- High-Performance Schemas in Clojure/Script with Malli 1/2

EDIT: 13.1.2024 - removed custom coercer as it's part of malli nowadays.

Data Transformation

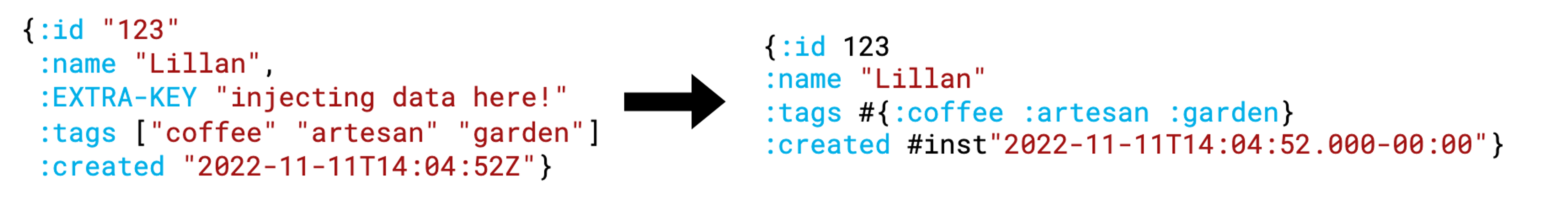

When working with real-life interconnected systems, we transform data between different Sources and Targets. You could be reading third-party JSON or GraphQL APIs, legacy XML endpoints, databases, and messages from queues in various formats into your target domain models.

In its simplest form, data is transformed via Type Coercion. Malli has a fast and extensible engine for both decoding and encoding values based on schema type and properties. It's covered in the earlier posts, so I won't go into the details here.
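As a quick reminder of what type coercion looks like, here is a minimal sketch. The `Poem` schema and the sample value are made up for this illustration; `m/decode` and `mt/string-transformer` are the regular Malli functions.

```clojure
(require '[malli.core :as m]
         '[malli.transform :as mt])

;; Hypothetical schema, used only for this illustration.
(def Poem
  [:map
   [:id :int]
   [:tags [:set :keyword]]])

;; Decoding coerces string input towards the types named in the schema.
(def decoded
  (m/decode Poem {:id "1", :tags ["poem" "classic"]} (mt/string-transformer)))

decoded
; => {:id 1, :tags #{:classic :poem}}

;; Decoding alone does not validate, so we check the result separately.
(m/validate Poem decoded)
; => true
```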

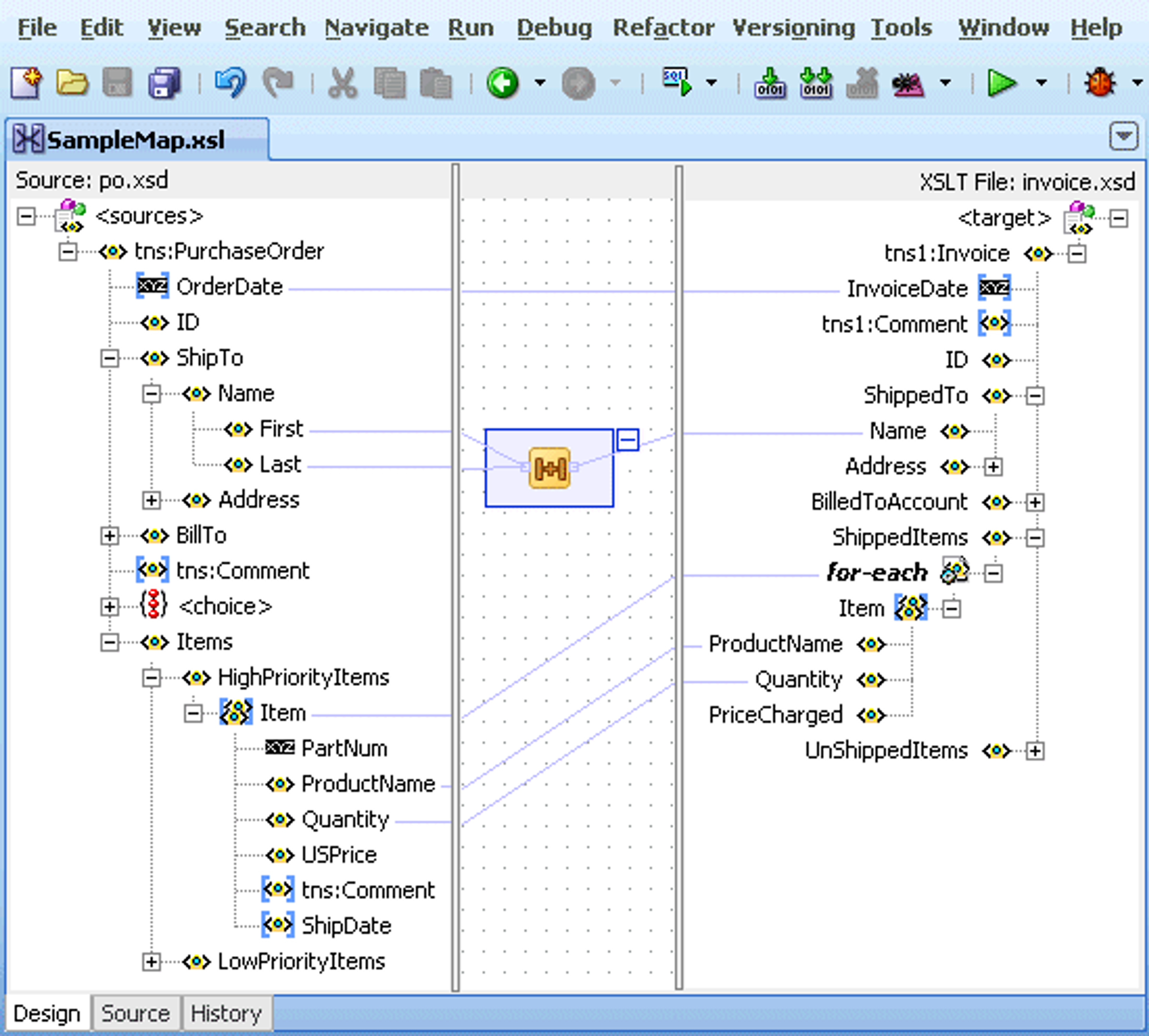

The second form is transforming between different shapes of data, for example reading a flat row from a CSV file and converting it into domain data with deeply nested values. In the early days of my career, XSLT was king, and there were a lot of commercial tools for graphically mapping data forms with it.
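To make the flat-to-nested case concrete, here is a small sketch in plain Clojure. The row and the `nest-address` helper are hypothetical and only illustrate the shape change.

```clojure
;; A hypothetical flat row, as a CSV reader might return it.
(def row {:id "1", :street "Mariankatu 2", :zip "00170"})

(defn nest-address
  "Moves the flat :street and :zip keys under a nested :address map."
  [row]
  (-> row
      (dissoc :street :zip)
      (assoc :address (select-keys row [:street :zip]))))

(nest-address row)
; => {:id "1", :address {:street "Mariankatu 2", :zip "00170"}}
```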

Nowadays, most cloud providers offer their own serverless dataflow glue factories and there are also good open source solutions available.

But wouldn't it be nice if we could just use our favourite programming languages for the job?

Clojure

Clojure is our go-to tool for modern cloud development, and it's a great language for working with data. The core library has a lot of tools for transforming maps, sequences and values, while retaining the immutable data semantics.

(-> {:id "1"}
    (update :id parse-long)
    (assoc :name "Elegia")
    (update :tags (fnil conj #{}) "poem"))
; => {:id 1, :name "Elegia", :tags #{"poem"}}

For advanced data processing, you can use libraries like tech.ml.dataset and scicloj.ml.

(require '[tech.v3.dataset :as ds])

(def orders (ds/->dataset "orders.csv" {:key-fn keyword, :parser-fn :string}))

orders
;| :id | :firstName | :lastName | :street | :item1 | :item2 | :zip |
;|----:|------------|-----------|-----------------|--------|--------|-------|
;| 1 | Sauli | Niinistö | Mariankatu 2 | coffee | buns | 00170 |
;| 2 | Sanna | Marin | Kesärannantie 1 | juice | pasta | 00250 |

(dissoc orders :zip :street)
;| :id | :firstName | :lastName | :item1 | :item2 |
;|----:|------------|-----------|--------|--------|
;| 1 | Sauli | Niinistö | coffee | buns |
;| 2 | Sanna | Marin | juice | pasta |

Transforming data programmatically is great, but we don't have to stop there. We can also describe the data models and data transformations as data and write an interpreter or compiler for them. Being able to modify the models and processes like the data itself enables us to build truly dynamic systems.
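A toy version of the idea, before bringing in any libraries: the transformation is a vector of steps, and a few lines of Clojure interpret it. The step names (`:rename`, `:assoc`, `:dissoc`) and the `interpret` function are invented for this sketch.

```clojure
;; Each step is plain data: [operation & args]. The operation names are hypothetical.
(def steps
  [[:rename :id :source-id]
   [:assoc :source "csv"]
   [:dissoc :zip]])

(defn apply-step
  "Applies a single data-described step to the map m."
  [m [op & args]]
  (case op
    :rename (let [[from to] args]
              (-> m (assoc to (get m from)) (dissoc from)))
    :assoc  (apply assoc m args)
    :dissoc (apply dissoc m args)))

(defn interpret
  "Runs all steps, in order, against the map m."
  [steps m]
  (reduce apply-step m steps))

(interpret steps {:id "1", :zip "00170"})
; => {:source-id "1", :source "csv"}

;; Because the steps are data, we can edit them like any other value.
(interpret (conj steps [:assoc :delivered false]) {:id "1", :zip "00170"})
; => {:source-id "1", :source "csv", :delivered false}
```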

Data, you say? Let's add Malli and Meander to the mix.

The Pipeline

We are building an imaginary service to reduce food waste by consolidating food orders from various systems into a single food delivery system. One of the sources is a CSV file.

Our target Malli schema looks like this:

(def Order
  [:map {:db/table "Orders"}
   [:id :uuid]
   [:source [:enum "csv" "online"]]
   [:source-id :string]
   [:name {:optional true} :string]
   [:items [:vector :keyword]]
   [:delivered {:default false} :boolean]
   [:address [:map
              [:street :string]
              [:zip :string]]]])

Example order:

(require '[malli.generator :as mg])

(mg/generate Order {:seed 3})
;{:id #uuid"b36c2541-2db8-4d75-b87d-3413bdacdb7d",
; :source "online",
; :source-id "",
; :items [:y!Aw11EA :PUPjb-_T :DPXc!g:e],
; :delivered true,
; :address {:street "MG7rxPm6jywJSPqEs"
;           :zip "116iS2c74JGKv90oAhJP7aq7iL8iyk"}}
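Besides generating values, the same schema can be used to check them. A minimal sketch, using a trimmed-down `Address` schema (hypothetical, mirroring the nested `:address` map above) together with `m/validate`, `m/explain` and `malli.error/humanize`:

```clojure
(require '[malli.core :as m]
         '[malli.error :as me])

;; A trimmed-down, hypothetical variant of the nested :address schema.
(def Address
  [:map
   [:street :string]
   [:zip :string]])

(m/validate Address {:street "Mariankatu 2", :zip "00170"})
; => true

;; For invalid data, explain + humanize describe what is wrong.
(def errors
  (-> (m/explain Address {:street "Mariankatu 2"})
      (me/humanize)))

errors
; => {:zip ["missing required key"]}
```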

Extract

We can start by inferring the source schema from samples:

(require '[malli.provider :as mp])

(def CSVOrder (mp/provide (ds/rows orders)))

CSVOrder
;[:map
; [:id :string]
; [:firstName :string]
; [:lastName :string]
; [:street :string]
; [:item1 :string]
; [:item2 :string]
; [:zip :string]]
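Schema inference does not need a dataset; any collection of sample values will do. A small sketch with hypothetical inline samples, where the second row lacks `:item2`, so the inferred schema has to allow that key to be absent:

```clojure
(require '[malli.core :as m]
         '[malli.provider :as mp])

;; Hypothetical samples; the second one lacks :item2.
(def samples
  [{:id "1", :item1 "coffee", :item2 "buns"}
   {:id "2", :item1 "juice"}])

(def Inferred (mp/provide samples))

;; Every sample should satisfy the schema that was inferred from it.
(every? (m/validator Inferred) samples)
; => true
```

The exact printed form of the inferred schema can differ between Malli versions, so the sketch checks the schema against its samples instead of showing it.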

Validating the result:

(require '[malli.core :as m])

(->> (ds/rows orders)
     (map (m/validator CSVOrder))
     (every? true?))
; => true

Loading and validating the data, no transformation needed:

(defn load-csv [file]
  (ds/rows (ds/->dataset file {:key-fn keyword, :parser-fn :string})))

(require '[malli.transform :as mt])

(def validate-input (m/coercer CSVOrder))

Testing the data pipeline so far:

(->> (load-csv "orders.csv")
     (map validate-input))
;({:id "1",
;  :firstName "Sauli",
;  :lastName "Niinistö",
;  :street "Mariankatu 2",
;  :item1 "coffee",
;  :item2 "buns",
;  :zip "00170"}
; {:id "2",
;  :firstName "Sanna",
;  :lastName "Marin",
;  :street "Kesärannantie 1",
;  :item1 "juice",
;  :item2 "pasta",
;  :zip "00250"})
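The happy path above hides what a coercer does with bad input: it throws. A minimal sketch with a hypothetical two-key `Row` schema, catching the exception and returning a marker value instead:

```clojure
(require '[malli.core :as m])

;; Hypothetical, trimmed-down row schema.
(def Row [:map [:id :string] [:zip :string]])

(def validate-row (m/coercer Row))

;; Valid input is returned as-is (no transformer was given).
(validate-row {:id "1", :zip "00170"})
; => {:id "1", :zip "00170"}

;; Invalid input raises an ex-info, which we catch here for illustration.
(defn safe-validate [row]
  (try
    (validate-row row)
    (catch clojure.lang.ExceptionInfo _
      :invalid)))

(safe-validate {:id 1})
; => :invalid
```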

Transform

We are using Meander to build the transformations. Meander is a great library for creating transparent data transformations in Clojure.

First, we build a little helper that allows us to define the Meander patterns and expressions as data, to be compiled later using eval. I'll explain later why this is important.

(require '[meander.match.epsilon :as mme])

(defn matcher [{:keys [pattern expression]}]
  (eval `(fn [data#]
           (let [~'data data#]
             ~(mme/compile-match-args
               (list 'data pattern expression)
               nil)))))

We define a pattern with logic variables (?id, ?zip etc.) and a memory variable (!item) to match the source data, and an expression to create the target data from the matched variables.

(def transform
  (matcher
   {:pattern '{:id ?id
               :firstName ?firstName
               :lastName ?lastName
               :street ?street
               :item1 !item
               :item2 !item
               :zip ?zip}
    :expression '{:id (random-uuid)
                  :source "csv"
                  :source-id ?id
                  :name (str ?firstName " " ?lastName)
                  :items !item
                  :address {:street ?street
                            :zip ?zip}}}))

We are changing the shape of the data:

- adding the :id and :source fields

- mapping :id to :source-id

- concatenating :name

- collecting the :items vector

- creating a submap :address with :street and :zip
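The two kinds of variables are easier to see in isolation. The sketch below calls Meander's `match` macro directly (from `meander.epsilon`, so no `eval` helper is involved) on a hypothetical three-key row: `?id` binds a single value, while `!item` collects every value it is matched against.

```clojure
(require '[meander.epsilon :as meander])

(def result
  (meander/match {:id "1", :item1 "coffee", :item2 "buns"}
    ;; pattern: ?id binds one value, !item collects each value it meets
    {:id ?id, :item1 !item, :item2 !item}
    ;; expression: ordinary Clojure that can use the bound variables
    {:source-id ?id, :items !item}))

(:source-id result)
; => "1"

;; Compared as a set, since this sketch makes no claim about collection order.
(set (:items result))
; => #{"coffee" "buns"}
```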

Adding the transformation into our pipeline:

(->> (load-csv "orders.csv")
     (map validate-input)
     (map transform))
;({:id #uuid"7f765cf9-24a2-4bd8-950f-35f6c8724c65",
;  :source "csv",
;  :source-id "1",
;  :name "Sauli Niinistö",
;  :items ["coffee" "buns"],
;  :address {:street "Mariankatu 2", :zip "00170"}}
; {:id #uuid"3fc14e81-170f-4234-983a-9d9cc2a47ed5",
;  :source "csv",
;  :source-id "2",
;  :name "Sanna Marin",
;  :items ["juice" "pasta"],
;  :address {:street "Kesärannantie 1", :zip "00250"}})

We are almost there.

Conform

The last step is to coerce and validate the data against our target schema. For this, we compose a Malli transformer:

(def validate-output
  (m/coercer
   Order
   (mt/transformer
    (mt/string-transformer)
    (mt/default-value-transformer))))
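To see what each half of the composed transformer contributes, here is a sketch with a hypothetical, trimmed-down `Delivery` schema. The string transformer turns strings into keywords, and the default-value transformer fills in the missing `:delivered` key.

```clojure
(require '[malli.core :as m]
         '[malli.transform :as mt])

;; Hypothetical, trimmed-down target schema.
(def Delivery
  [:map
   [:items [:vector :keyword]]
   [:delivered {:default false} :boolean]])

;; String transformer only: items are coerced, :delivered is still missing.
(def coerced
  (m/decode Delivery {:items ["coffee" "buns"]} (mt/string-transformer)))

coerced
; => {:items [:coffee :buns]}

;; Composed transformer: coercion plus default values.
(def conformed
  (m/decode Delivery
            {:items ["coffee" "buns"]}
            (mt/transformer
             (mt/string-transformer)
             (mt/default-value-transformer))))

conformed
; => {:items [:coffee :buns], :delivered false}
```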

Our final transformation pipeline:

(->> (load-csv "orders.csv")
     (map validate-input)
     (map transform)
     (map validate-output))
;({:id #uuid"a3fae918-9b5a-4f54-9d32-4c22b74e8922",
;  :source "csv",
;  :source-id "1",
;  :name "Sauli Niinistö",
;  :items [:coffee :buns],
;  :address {:street "Mariankatu 2", :zip "00170"},
;  :delivered false}
; {:id #uuid"5f4cb486-6990-457b-8fca-bebc25b23277",
;  :source "csv",
;  :source-id "2",
;  :name "Sanna Marin",
;  :items [:juice :pasta],
;  :address {:street "Kesärannantie 1", :zip "00250"},
;  :delivered false})

- :items are now coerced into keywords

- :delivered key was set to the default value

The pipeline didn't throw an exception, so the data is valid. We are done.

It's all data

We have defined both the data models (Malli Schemas) and the transformations (Meander Patterns and Expressions) as data. Let's now extract these into a separate transformation map:

(def transformation
  {:registry {:csv/order [:map
                          [:id :string]
                          [:firstName :string]
                          [:lastName :string]
                          [:street :string]
                          [:item1 :string]
                          [:item2 :string]
                          [:zip :string]]
              :domain/order [:map {:db/table "Orders"}
                             [:id :uuid]
                             [:source [:enum "csv" "online"]]
                             [:source-id :string]
                             [:name {:optional true} :string]
                             [:items [:vector :keyword]]
                             [:delivered {:default false} :boolean]
                             [:address [:map
                                        [:street :string]
                                        [:zip :string]]]]}
   :mappings {:source :csv/order
              :target :domain/order
              :pattern '{:id ?id
                         :firstName ?firstName
                         :lastName ?lastName
                         :street ?street
                         :item1 !item
                         :item2 !item
                         :zip ?zip}
              :expression '{:id (random-uuid)
                            :source "csv"
                            :source-id ?id
                            :name (str ?firstName " " ?lastName)
                            :items !item
                            :address {:street ?street
                                      :zip ?zip}}}})

As it's just data, we can serialize it as EDN or Transit over the wire, inspect and edit it in the browser, send it back and store it in the database.

(require '[clojure.edn :as edn])

(-> transformation
    (pr-str)
    (edn/read-string)
    (= transformation))
; => true

Note: As we use eval to compile the Meander definitions, we should sandbox the execution or use tools like SCI if we are reading the definitions from untrusted sources.
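As a rough illustration of the SCI route, the sketch below evaluates an expression string inside the SCI interpreter, exposing only the bindings we choose. It does not wire SCI into the Meander compilation step; it only shows the evaluation side, and the `untrusted` string and the binding names are made up.

```clojure
(require '[sci.core :as sci])

;; An expression arriving as a string from a hypothetical untrusted source.
(def untrusted "(str first-name \" \" last-name)")

;; Only the listed bindings (plus SCI's built-in core functions) are visible.
(def evaluated
  (sci/eval-string untrusted
                   {:bindings {'first-name "Sanna"
                               'last-name  "Marin"}}))

evaluated
; => "Sanna Marin"
```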

Function to compile the transformation data structure into a Clojure function:

(defn transformer [{:keys [registry mappings]} source-transformer target-transformer]
  (let [{:keys [source target]} mappings
        ;; validate input -> reshape with Meander -> coerce and validate output
        xf (comp (map (m/coercer (get registry source) source-transformer))
                 (map (matcher mappings))
                 (map (m/coercer (get registry target) target-transformer)))]
    (fn [data] (into [] xf data))))

Transformation pipeline:

(def pipeline
  (transformer
   transformation
   nil
   (mt/transformer
    (mt/string-transformer)
    (mt/default-value-transformer))))

Note: It's not yet fully declarative as we are passing source & target transformers into the transformer function, but that would be easy to fix.
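One possible way to close that gap, sketched under the assumption that the transformation map would carry transformer names as keywords (for example `:target-transformers [:string :default-value]`, a key that does not exist in the map above). The `transformers` lookup table and `resolve-transformer` are hypothetical helpers.

```clojure
(require '[malli.core :as m]
         '[malli.transform :as mt])

;; Hypothetical lookup table from keywords to transformer constructors.
(def transformers
  {:string        mt/string-transformer
   :default-value mt/default-value-transformer})

(defn resolve-transformer
  "Builds a composed Malli transformer from a vector of keywords, or nil for none."
  [names]
  (when (seq names)
    (->> names
         (map (fn [k]
                (if-let [ctor (get transformers k)]
                  (ctor)
                  (throw (ex-info "Unknown transformer" {:name k})))))
         (apply mt/transformer))))

(m/decode [:map [:delivered {:default false} :boolean]]
          {}
          (resolve-transformer [:string :default-value]))
; => {:delivered false}
```

With something like this in place, the transformer names could live in the transformation map next to :source and :target.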

Trying it out:

(pipeline (load-csv "orders.csv"))
;[{:id #uuid"e809652f-8399-467e-9bab-a0e2bba1548e",
;  :source "csv",
;  :source-id "1",
;  :name "Sauli Niinistö",
;  :items [:coffee :buns],
;  :address {:street "Mariankatu 2", :zip "00170"},
;  :delivered false}
; {:id #uuid"fc255810-7235-43ba-93bb-e5abc7c78168",
;  :source "csv",
;  :source-id "2",
;  :name "Sanna Marin",
;  :items [:juice :pasta],
;  :address {:street "Kesärannantie 1", :zip "00250"},
;  :delivered false}]

It works. We only needed the matcher and transformer functions to turn our data into a transformation pipeline. That's around 20 lines of code, all of it generic.

Performance

Let's compare the performance of our data-driven approach to just writing a simple transformation function in Clojure (without any validation).

(defn clojure-pipeline [x]
  (mapv (fn [{:keys [id firstName lastName street item1 item2 zip]}]
          {:id (random-uuid)
           :source "csv"
           :source-id id
           :name (str firstName " " lastName)
           :items [(keyword item1) (keyword item2)]
           :address {:street street
                     :zip zip}
           :delivered false}) x))

Loading 1000 random users for the test:

(require '[hato.client :as hc])

(def data
  (let [!id (atom 0)
        rand-item #(rand-nth ["bun" "coffee" "pasta" "juice"])]
    (->> (hc/get "https://randomuser.me/api/"
                 {:query-params {:results 1000, :seed 123}
                  :as :json})
         :body
         :results
         (map (fn [user]
                {:id (str (swap! !id inc))
                 :firstName (-> user :name :first)
                 :lastName (-> user :name :last)
                 :street (str (-> user :location :street :name) " "
                              (-> user :location :street :number))
                 :item1 (rand-item)
                 :item2 (rand-item)
                 :zip (-> user :location :postcode str)})))))

(take 2 data)
;({:id "1"
;  :firstName "Heldo"
;  :lastName "Campos"
;  :street "Rua Três 9120"
;  :item1 "bun"
;  :item2 "juice"
;  :zip "73921"}
; {:id "2",
;  :firstName "Candice",
;  :lastName "Long",
;  :street "Northaven Rd 4744",
;  :item1 "pasta",
;  :item2 "coffee",
;  :zip "25478"})

Benchmarking with Criterium:

(require '[criterium.core :as cc])

;; 480µs
(cc/quick-bench (clojure-pipeline data))

;; 840µs
(cc/quick-bench (pipeline data))

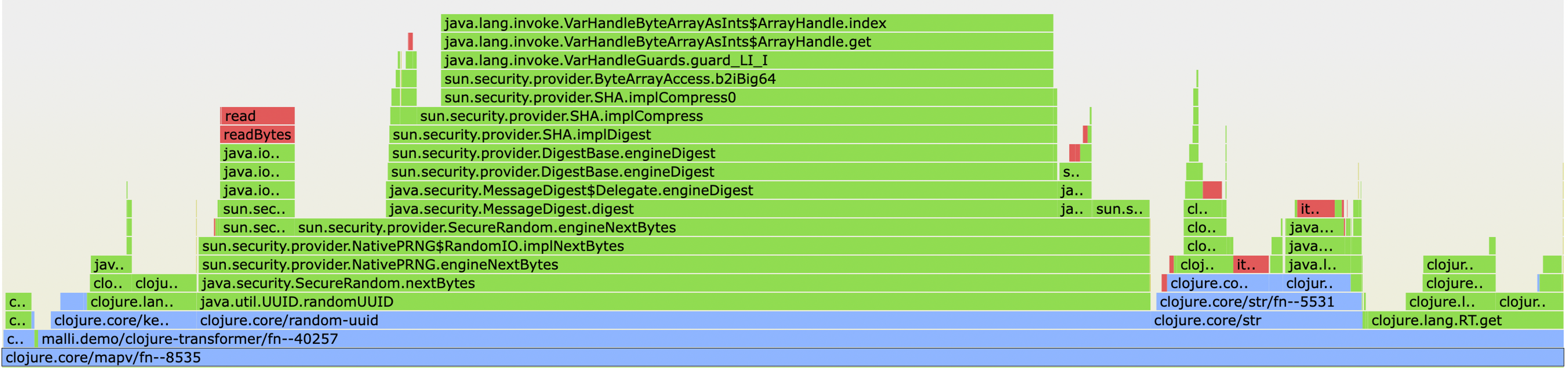

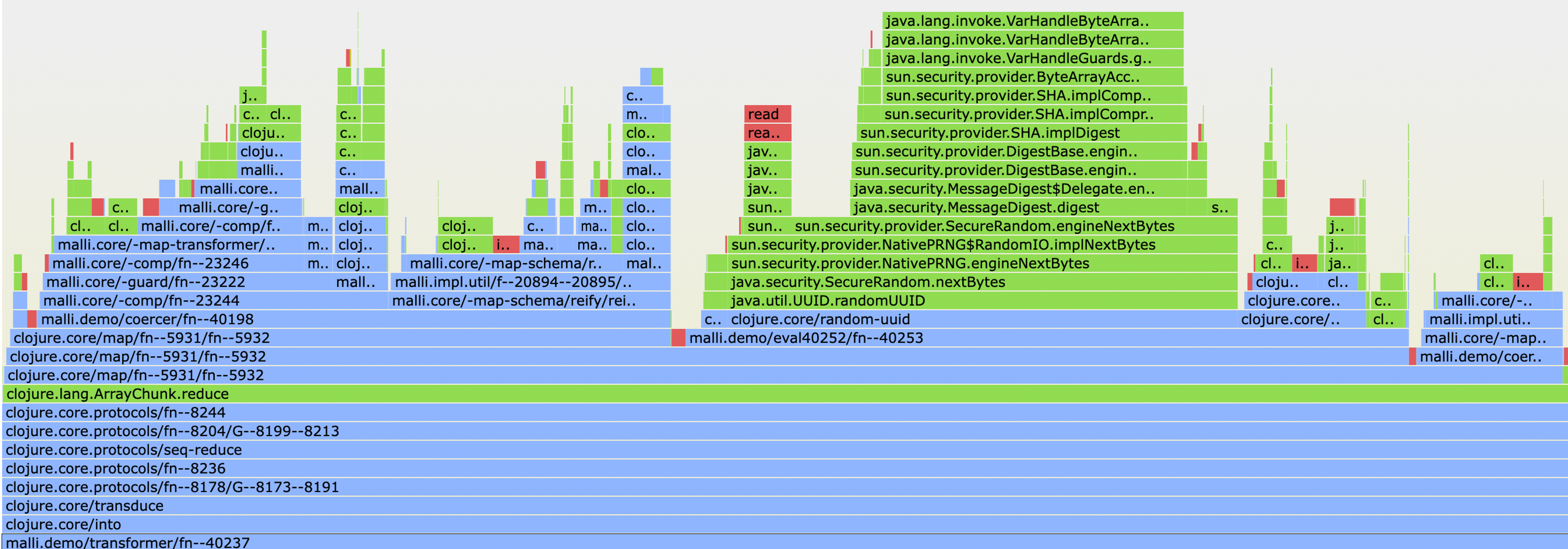

Our data-driven pipeline is about 2x slower in this test, but it validates both the input & output schemas, while the pure Clojure implementation is doing no validation. Flamegraphs below:

VisualizationLink to Visualization

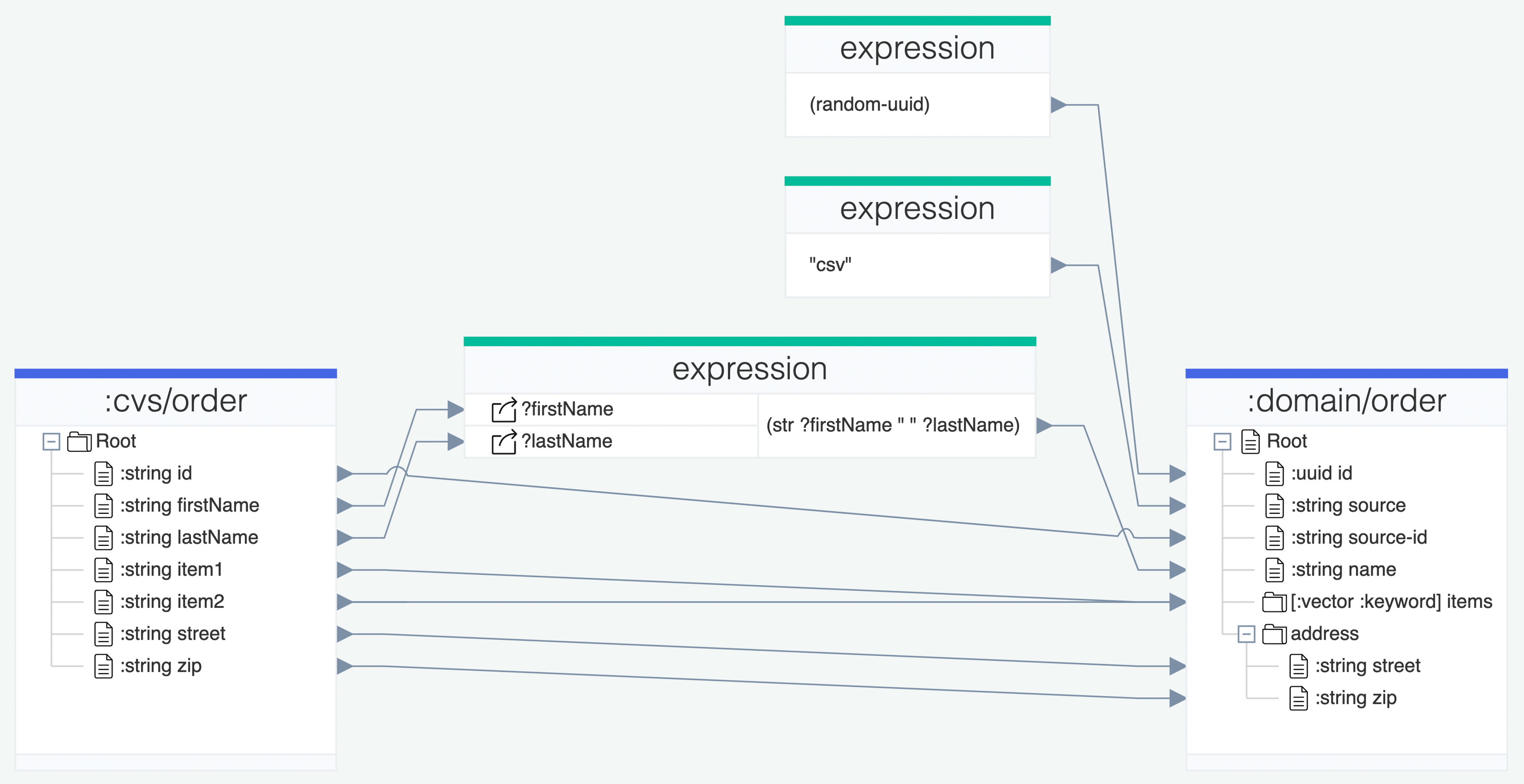

Another benefit of using a data-oriented approach is that we can visualize or even create visual editors for it. Our transformation visualized:

Epilogue

Clojure is a great language for writing data transformations and for simple cases, that's all we need. But to ensure data correctness, we should also validate data at the borders. Malli is a tool for defining the data schemas and validating & coercing the data. Meander is a data-driven transformation tool that complements Malli. Together they enable fully data-driven transformation pipelines.

Being able to describe systems as data allows us to blur the boundaries between development time and runtime. We can inspect, visualize and edit the system at runtime in the browser, send it over the wire and store it in a database. With the right tools, we can compile applications from the system without sacrificing any runtime performance. With Malli, we can also generate sample data from schemas and use generative testing to verify the transformation logic. We can also pull development-time schemas from runtime data, enabling static type-linting on dynamic schemas. I'll write another post about it.
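As a taste of the generative testing idea, here is a minimal sketch: generate inputs from a source schema, run them through a transformation and check every result against the target schema. The `Source` and `Target` schemas and the hand-written `reshape` function are hypothetical stand-ins for the real pipeline; `mg/sample` needs test.check on the classpath.

```clojure
(require '[malli.core :as m]
         '[malli.generator :as mg])

;; Hypothetical, trimmed-down source and target schemas.
(def Source [:map [:id :string] [:firstName :string] [:lastName :string]])
(def Target [:map [:source-id :string] [:name :string]])

(defn reshape
  "Stand-in for the real transformation under test."
  [{:keys [id firstName lastName]}]
  {:source-id id
   :name (str firstName " " lastName)})

(def valid-target? (m/validator Target))

;; Generate 100 source values and check that every output is a valid target.
(->> (mg/sample Source {:size 100, :seed 1})
     (map reshape)
     (every? valid-target?))
; => true
```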

If Clojure, Malli or building dynamic data-driven systems got you curious, drop me an email and let's talk. Or join Clojurians Slack and find me (@ikitommi) there.

And yes, it's all Open Source.